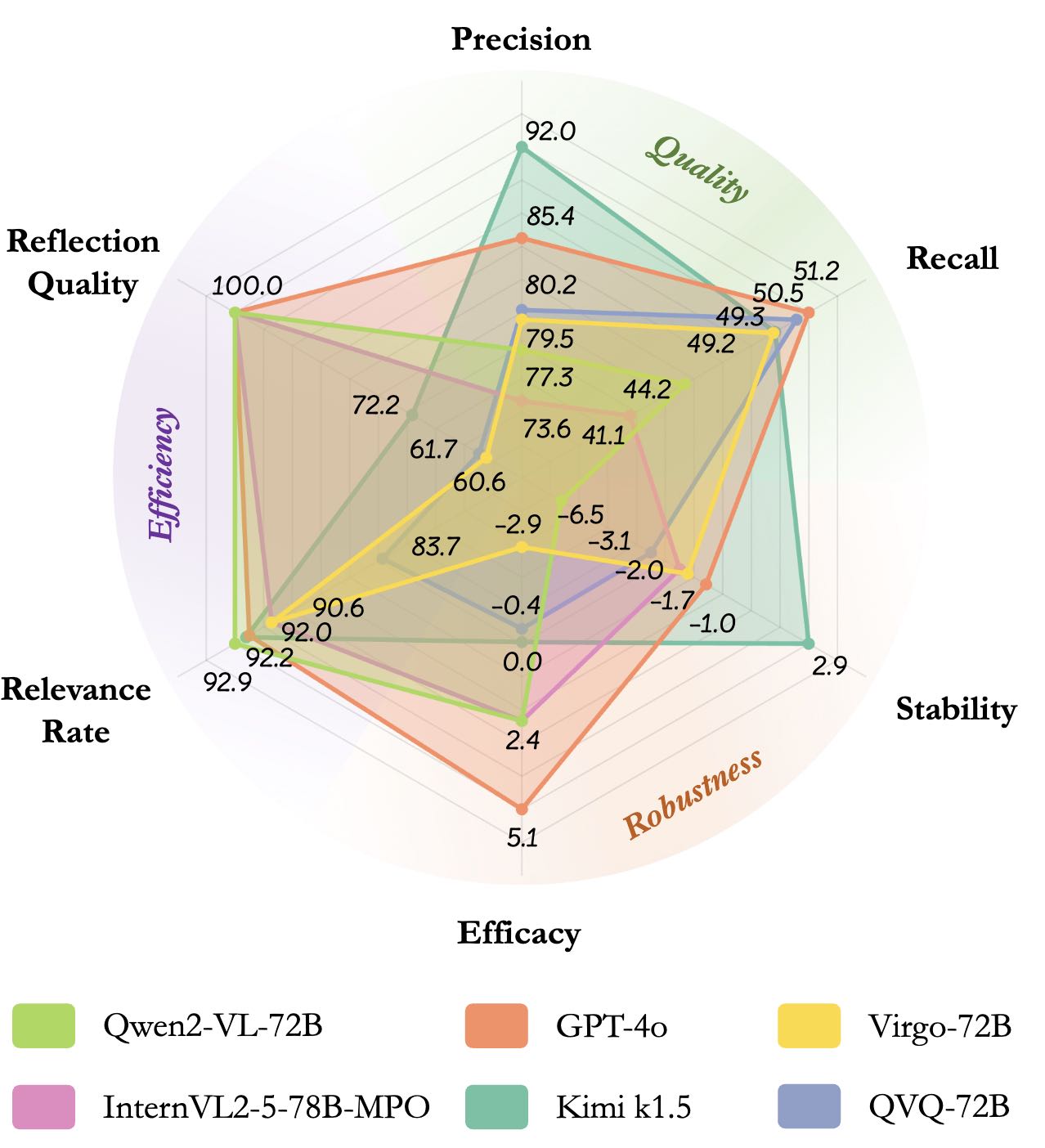

| # | Model | CoT Quality | CoT Robustness | CoT Efficiency | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| F1 Score | Precision | Recall | Avg. Score | Stability | Efficacy | Avg. Score | Relevance Rate | Reflection Quality | ||

| 1 | Kimi k1.5 🥇 | 64.2 | 92.0 | 49.3 | 1.4 | 2.9 | 0.0 | 82.2 | 92.2 | 72.2 |

| 2 | GPT-4o 🥈 | 64.0 | 85.4 | 51.2 | 2.1 | -1.0 | 5.1 | 96.0 | 92.0 | 100.0 |

| 3 | QVQ-72B 🥉 | 62.0 | 80.2 | 50.5 | -1.8 | -3.1 | -0.4 | 67.9 | 83.7 | 61.7 |

| 4 | Virgo-72B | 60.8 | 79.5 | 49.2 | -2.3 | -1.7 | -2.9 | 75.3 | 90.6 | 60.6 |

| 5 | JT-VL-Chat | 58.9 | 88.0 | 44.3 | 1.5 | -2.0 | 5.1 | 71.9 | 90.9 | 52.8 |

| 6 | Qwen2-VL-72B | 56.2 | 77.3 | 44.2 | -2.1 | -6.5 | 2.4 | 96.5 | 92.9 | 100.0 |

| 7 | InternVL2.5-78B-MPO | 52.7 | 73.6 | 41.1 | 0.2 | -2.0 | 2.4 | 95.3 | 90.6 | 100.0 |

| 8 | InternVL2.5-8B-MPO | 43.0 | 60.4 | 33.4 | 0.6 | 0.3 | 0.9 | 94.7 | 89.3 | 100.0 |

| 9 | Qwen2-VL-7B | 42.1 | 61.6 | 32.0 | -4.0 | -3.1 | -4.8 | 94.9 | 89.8 | 100.0 |

| 10 | InternVL2.5-8B | 41.1 | 60.0 | 31.3 | -3.0 | -6.8 | 0.9 | 98.4 | 96.8 | 100.0 |

| 11 | MiniCPM-V-2.6 | 39.8 | 57.3 | 30.5 | -3.5 | -4.8 | -2.2 | 92.8 | 85.7 | 100.0 |

| 12 | LLaVA-OV-72B | 36.3 | 57.3 | 26.6 | -0.2 | 0.3 | -0.6 | 95.4 | 90.8 | 100.0 |

| 13 | LLaVA-CoT | 34.9 | 53.9 | 25.8 | 0.4 | 1.4 | -0.6 | 94.0 | 88.1 | 100.0 |

| 14 | LLaVA-OV-7B | 30.9 | 50.9 | 22.2 | -3.4 | -3.8 | -3.0 | 91.5 | 83.0 | 100.0 |

| 15 | Mulberry | 27.4 | 59.1 | 17.8 | 3.5 | 4.4 | 2.6 | 89.5 | 79.0 | 100.0 |

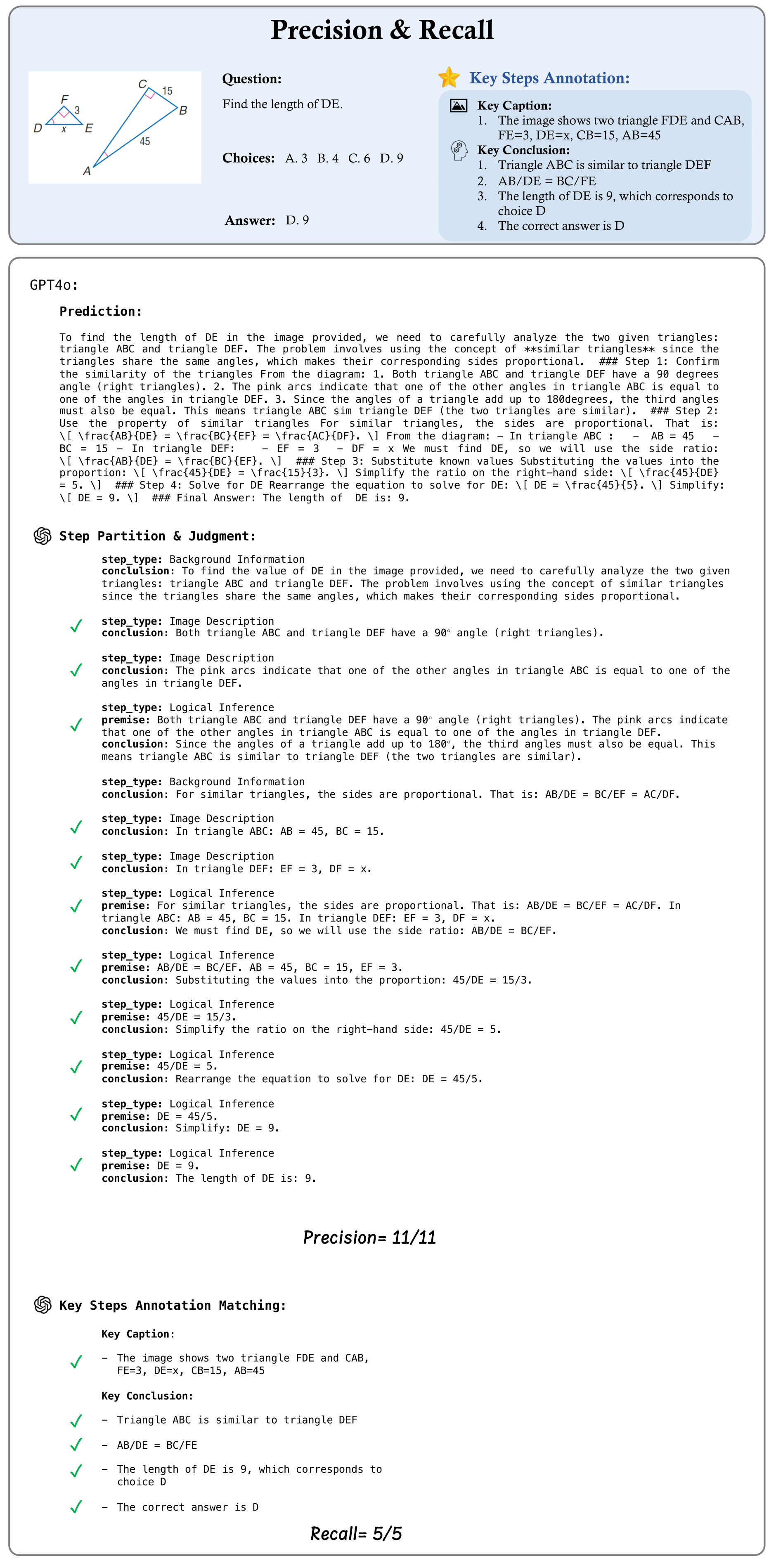

Introduction

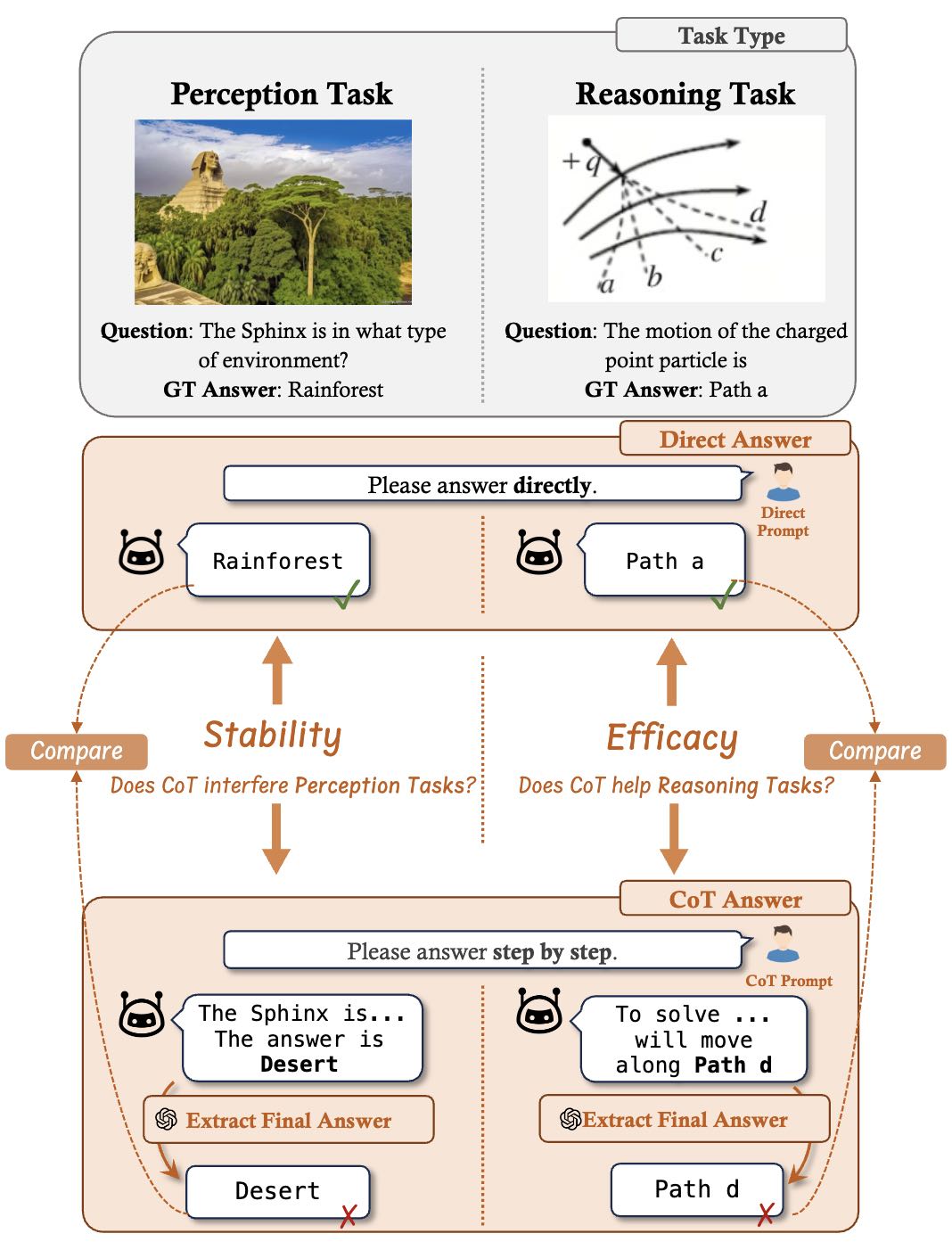

Answering questions with Chain-of-Thought (CoT) has significantly enhanced the reasoning capabilities of Large Language Models (LLMs), yet its impact on Large Multimodal Models (LMMs) still lacks a systematic assessment and in-depth investigation.

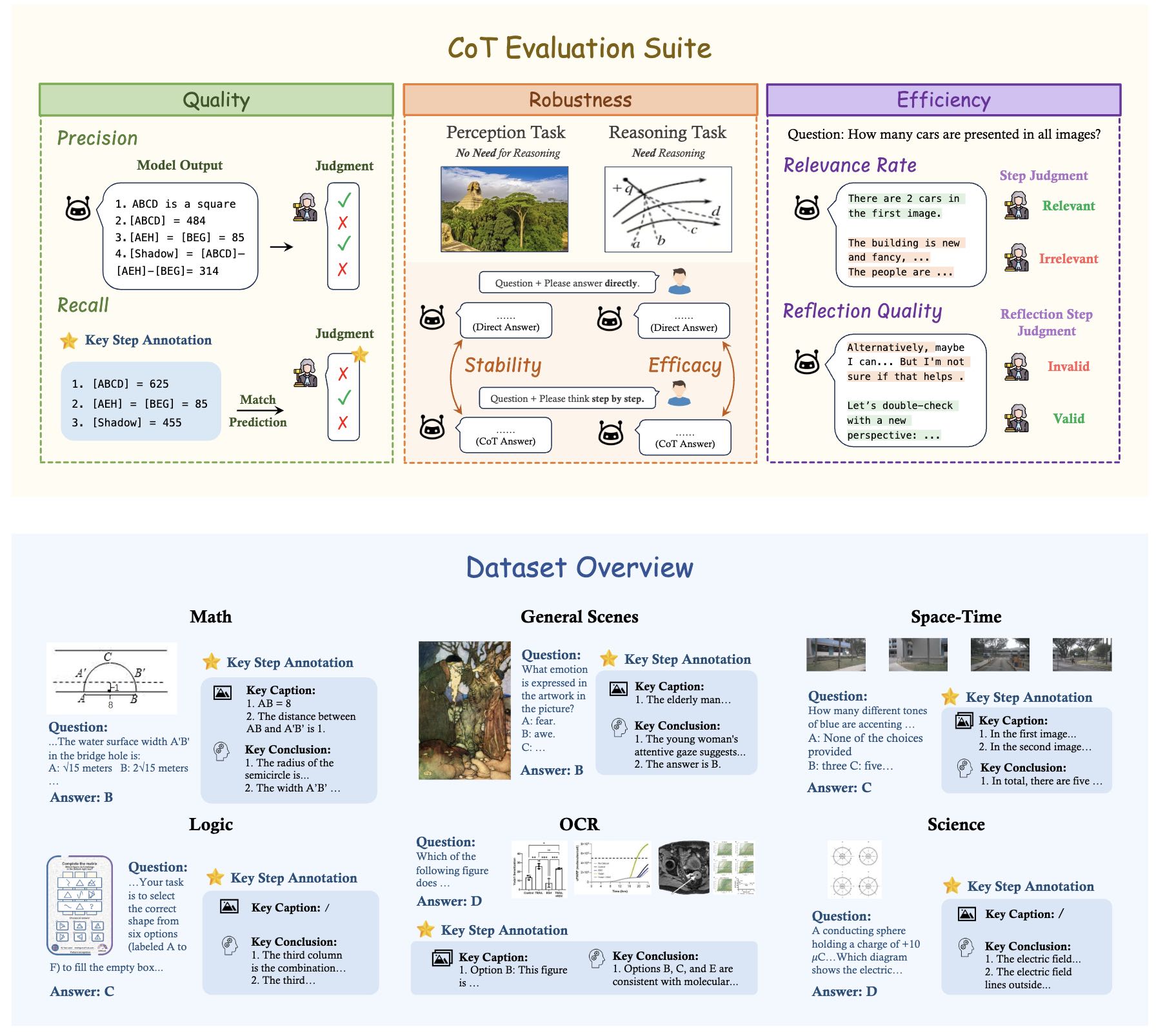

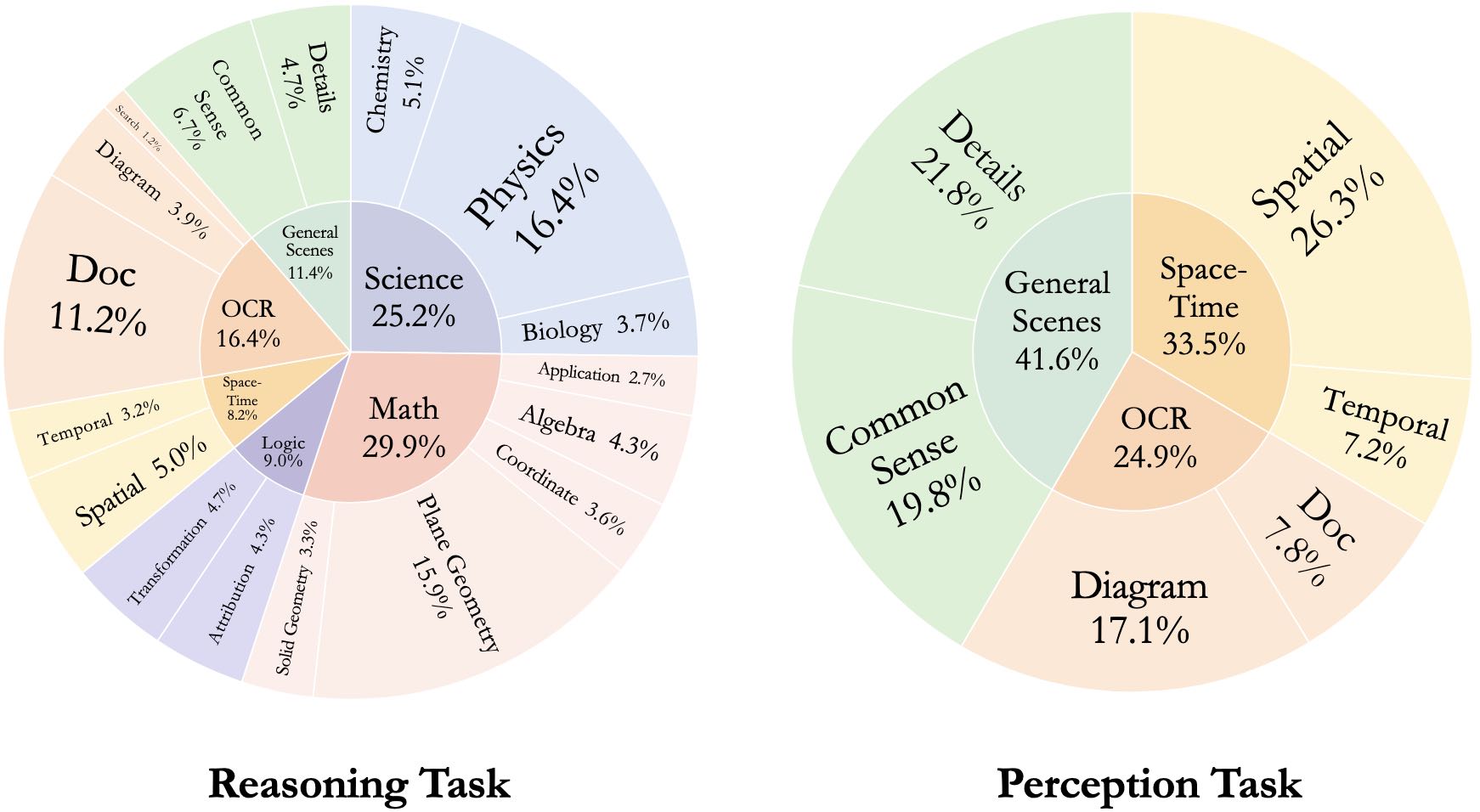

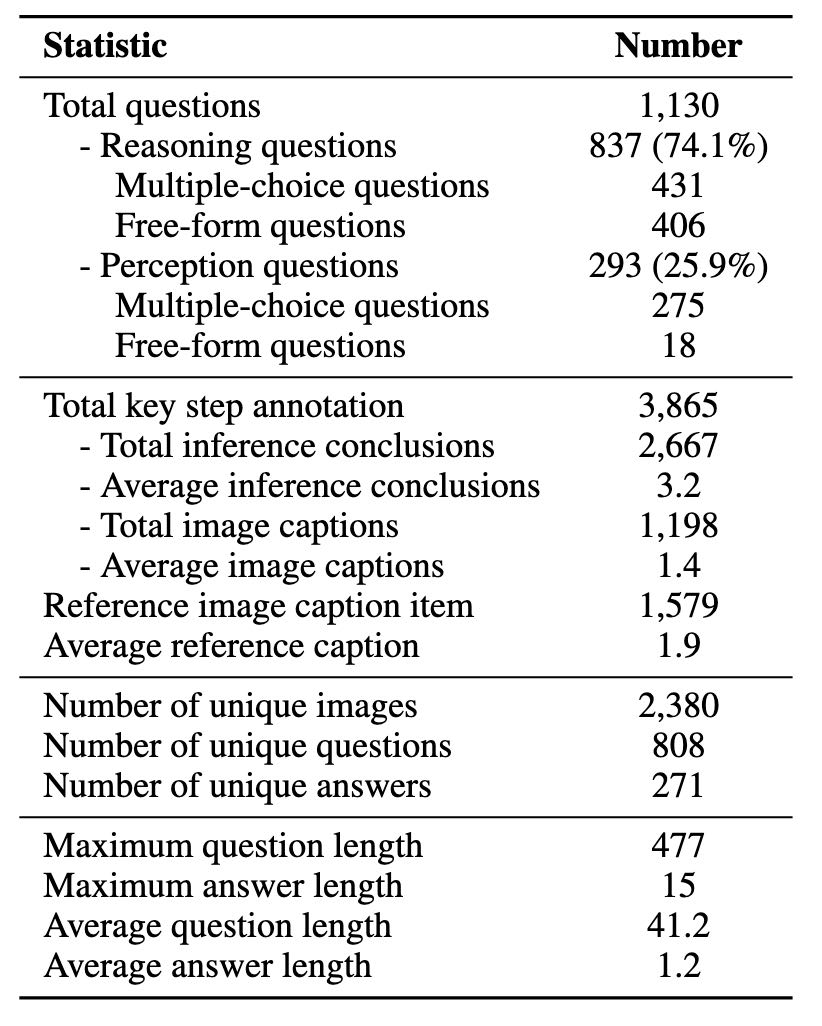

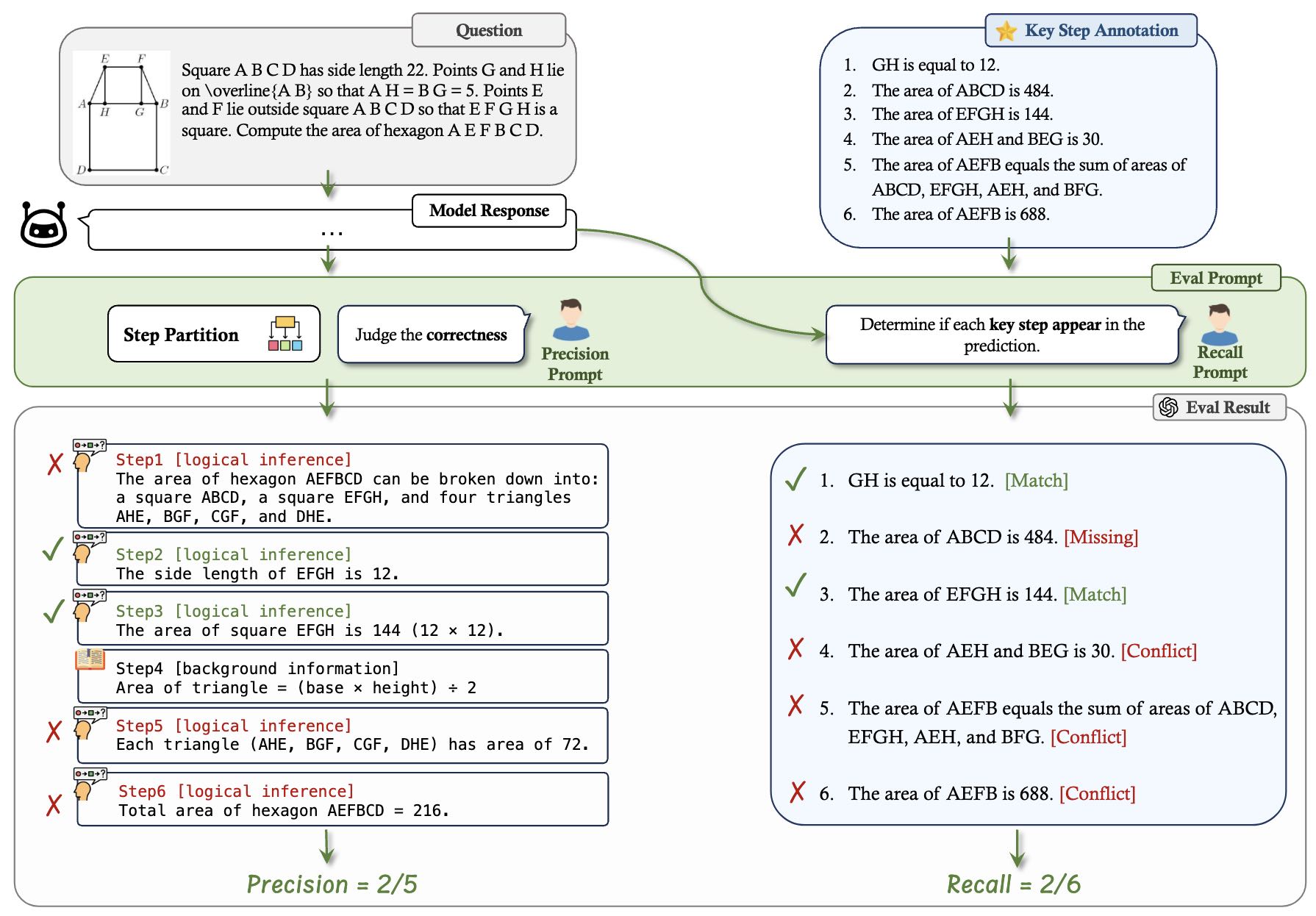

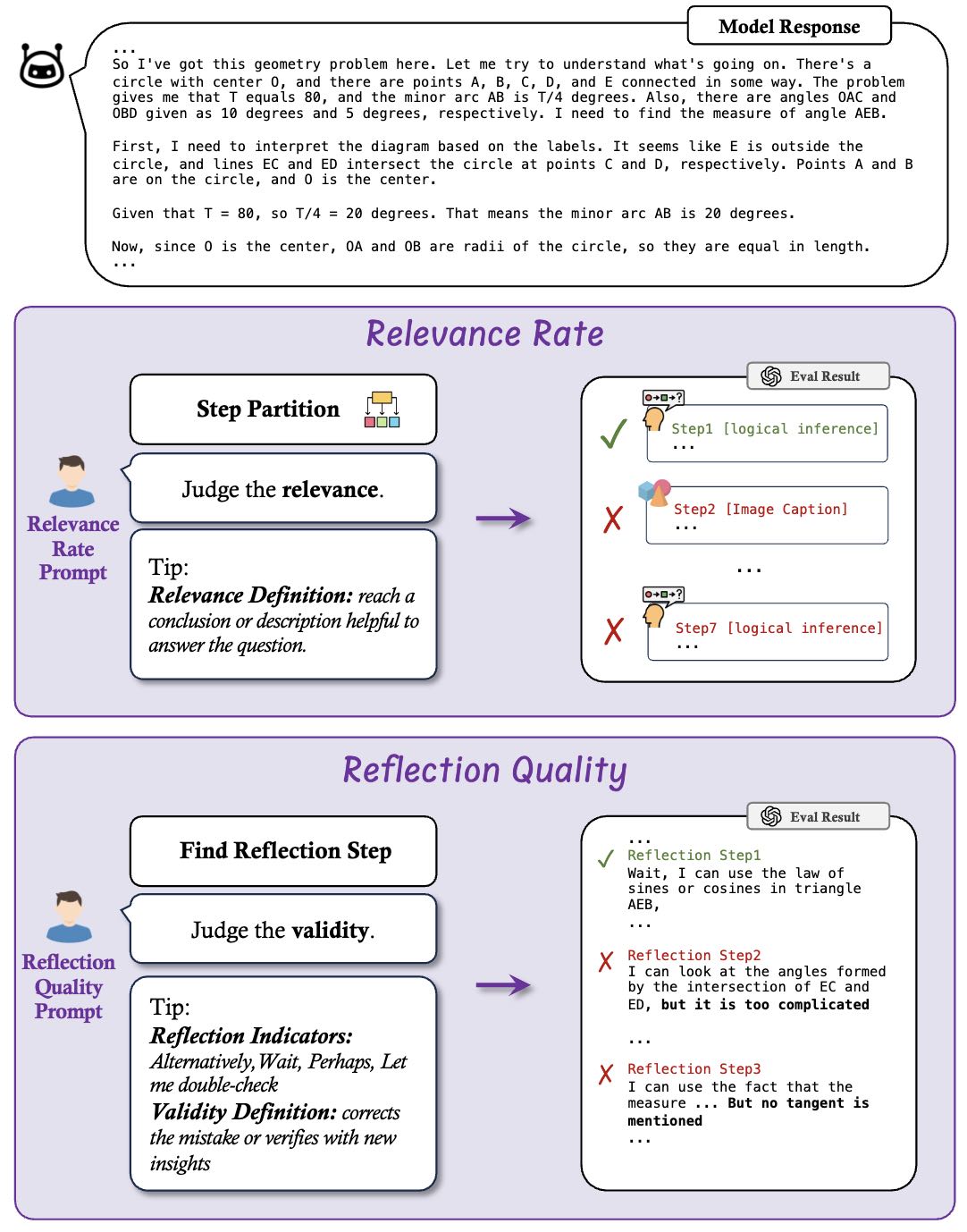

In this paper, we introduce MME-CoT , a specialized benchmark evaluating the CoT reasoning performance of LMMs, spanning six domains: math, science, OCR, logic, space-time, and general scenes. As the first comprehensive study in this area, we propose a thorough evaluation suite incorporating three novel metrics that assess the reasoning quality, robustness, and efficiency at a fine-grained level.

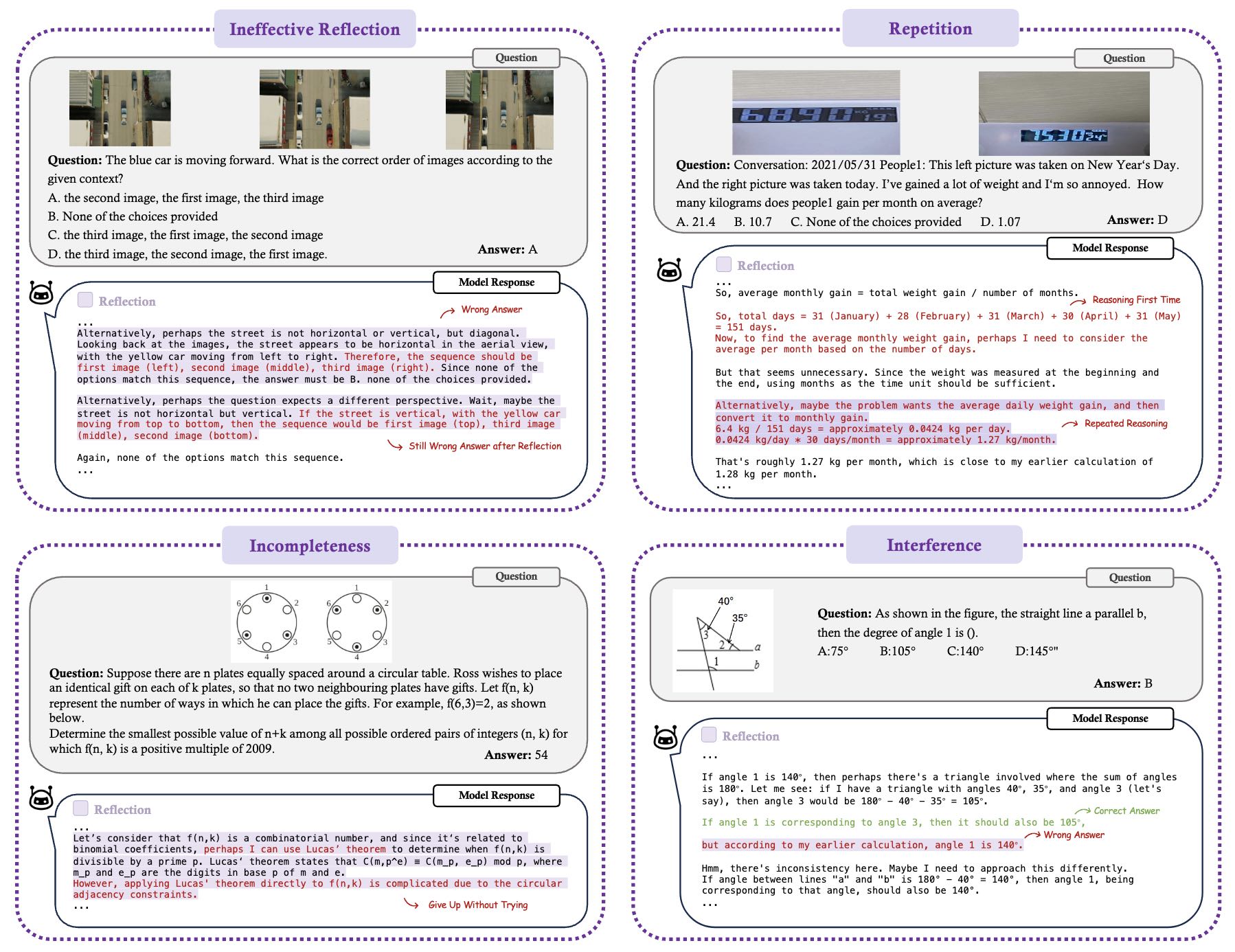

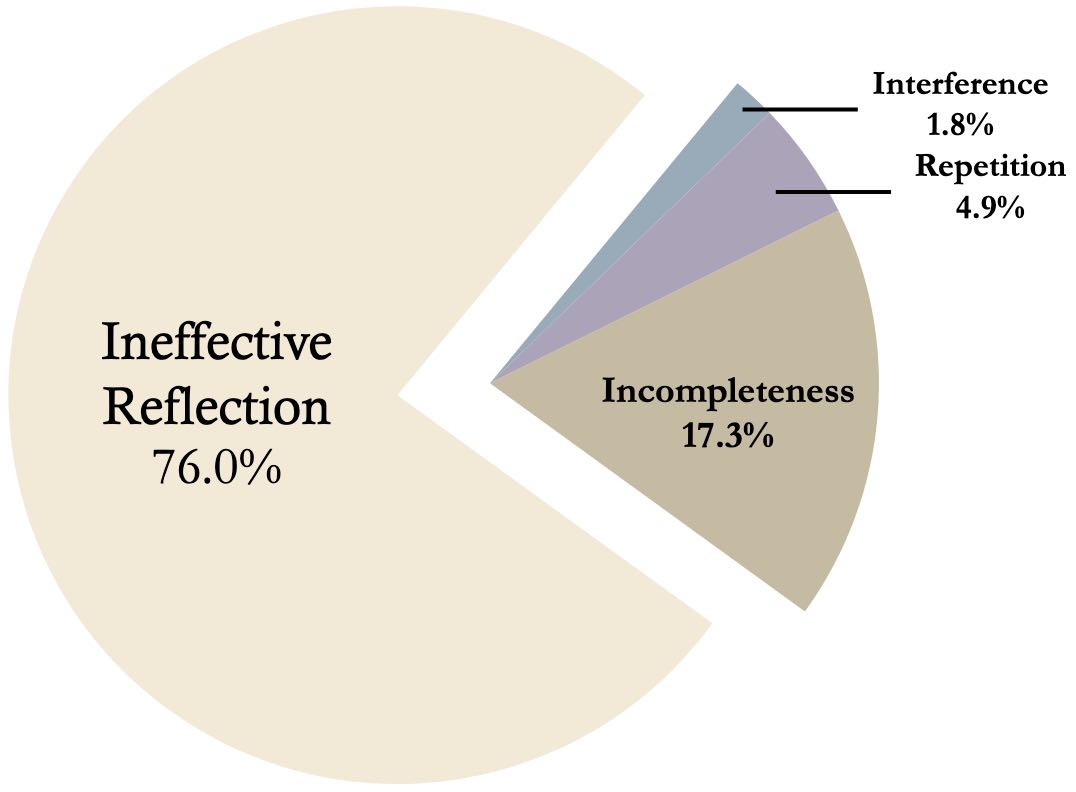

Leveraging curated high-quality data and a unique evaluation strategy, we conduct an in-depth analysis of state-of-the-art LMMs, uncovering several key insights: (1) Models with reflection mechanism demonstrate a superior CoT quality, with Kimi k1.5 outperforming GPT-4o and demonstrating the highest quality results; (2) CoT prompting often degrades LMM performance on perception-heavy tasks, suggesting a potentially harmful overthinking behavior; (3) Although the CoT quality is high, LMMs with reflection exhibit significant inefficiency in both normal response and self-correction phases. We hope MME-CoT serves as a foundation for advancing multimodal reasoning in LMMs.

Chain-of-Thought Performance of Leading LMMs on MME-CoT.